Deep neural networks with a large number of parameters can be very powerful, and they are common in many applications. In 2012, the success of AlexNet made deep learning popular across the AI community. However, overfitting and slow training are serious problems in these deep networks.

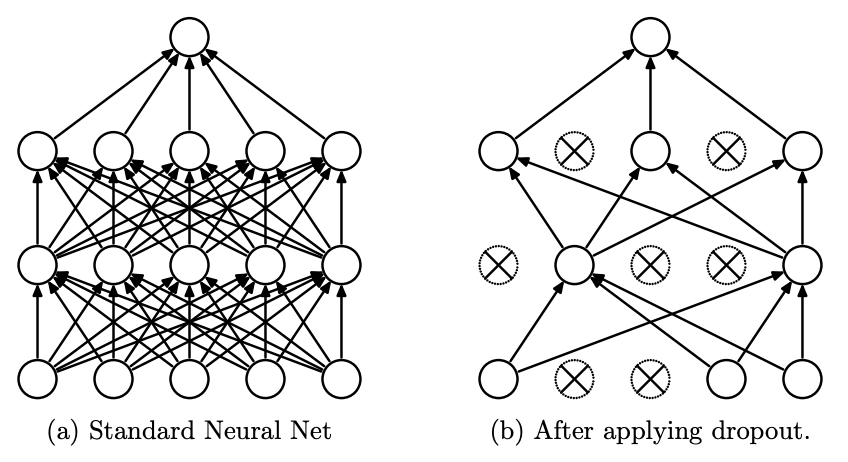

In the 2012 AlexNet paper, researchers used a new regularization method, Dropout, to reduce overfitting. Overfitting makes a model memorize the training data rather than learn generalizable patterns, which results in poor performance on unseen data. With dropout, neurons are randomly ignored with a certain probability during training, and the activations of the remaining neurons are scaled up to compensate. This prevents neurons from co-adapting and creates a thinned network that has to learn broader structures in the data. At test time, no neurons are dropped.
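A minimal sketch of the training-time behaviour described above, assuming the common "inverted dropout" convention in which surviving activations are divided by the keep probability during training (the original paper instead rescales weights at test time). The function name `inverted_dropout` and its parameters are hypothetical:

```python
import random


def inverted_dropout(activations, drop_prob, training, rng=random):
    """Apply inverted dropout to a list of activations.

    drop_prob: probability of zeroing each unit (hypothetical parameter name).
    training:  when False (test time), the input is returned unchanged.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # No units are dropped at test time.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    out = []
    for a in activations:
        if rng.random() < keep_prob:
            # Surviving units are scaled up so the expected activation is unchanged.
            out.append(a / keep_prob)
        else:
            out.append(0.0)
    return out


rng = random.Random(0)
print(inverted_dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, training=True, rng=rng))
print(inverted_dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, training=False))
```

Because of the scaling, nothing needs to change at test time: the second call simply returns the input.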

Neural nets can learn very complex structures in data. However, with limited training data, many of these complex structures are just sampling noise. Many regularization methods were used before Dropout, ranging from early stopping (halting training as soon as performance on a validation set starts getting worse) to L1 and L2 regularization and other weight penalties.
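As an illustration of the first of these methods, here is a minimal early-stopping sketch. The `patience` parameter and the loss values are made up for illustration and are not taken from the paper:

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights would be kept.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs (a hypothetical setting for illustration).
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation error keeps getting worse: stop training
    return best_epoch


losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.40]
print(early_stopping_epoch(losses, patience=3))  # → 3
```

With `patience=3` training stops after epoch 6 and never sees the later improvement at epoch 7, which shows the tradeoff a patience value controls.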

Training many different models and averaging their outputs is the ideal approach, but it is not computationally efficient. Dropout approximates this efficiently by temporarily removing units together with their incoming and outgoing connections. The optimal dropout probability for hidden units tends to be close to 0.5 in many applications.

Dropout samples a new thinned network from the original network. A neural network with n units can be seen as a collection of 2^n possible thinned networks.
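For a small network, the thinned networks can be listed explicitly. This is a sketch that assumes each unit is simply kept (1) or dropped (0); `thinned_networks` is a hypothetical helper:

```python
from itertools import product


def thinned_networks(n_units):
    """Return every keep/drop mask for n_units droppable units.

    Each mask is a tuple of 0/1 flags (1 = unit kept); every mask defines
    one thinned network that shares the same weights.
    """
    return list(product((0, 1), repeat=n_units))


masks = thinned_networks(3)
print(len(masks))  # → 8, i.e. 2**3
print(masks[:3])   # → [(0, 0, 0), (0, 0, 1), (0, 1, 0)]
```

The count doubles with every extra unit, which is why explicitly training and averaging all thinned networks is infeasible for real networks.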

The full network, without dropout, is used during testing. If a unit is retained with probability p during training, the outgoing weights of that unit are multiplied by p at test time. This approximately averages the predictions of all 2^n thinned networks in a single forward pass.
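To see why weight scaling works, consider a single linear unit. In this special case, multiplying the weights by p reproduces the probability-weighted average over all 2^n thinned networks exactly. With nonlinear hidden layers it is only an approximation. The helper names below are hypothetical:

```python
from itertools import product


def expected_output_over_masks(weights, inputs, p):
    """Average a linear unit's output over all 2**n thinned networks.

    Each input unit is retained with probability p, so a mask with k kept
    units has probability p**k * (1 - p)**(n - k).
    """
    n = len(weights)
    total = 0.0
    for mask in product((0, 1), repeat=n):
        kept = sum(mask)
        prob = p ** kept * (1 - p) ** (n - kept)
        output = sum(m * w * x for m, w, x in zip(mask, weights, inputs))
        total += prob * output
    return total


def weight_scaled_output(weights, inputs, p):
    """Test-time rule: use the full network with weights multiplied by p."""
    return sum(p * w * x for w, x in zip(weights, inputs))


w = [0.5, -1.0, 2.0]
x = [1.0, 3.0, 0.5]
print(expected_output_over_masks(w, x, p=0.5))  # → -0.75
print(weight_scaled_output(w, x, p=0.5))        # → -0.75
```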

Dropout can also be applied to RBMs and other graphical models.

The motivation for this technique came from a theory of the role of sex in evolution. In sexual reproduction, half of the genes come from one parent and the other half come from the other parent, allowing random mutations and breaking up co-dependencies between genes.

A figure in the original paper summarizes the feed-forward step of dropout. "r" denotes a vector of independent Bernoulli random variables. This random vector is multiplied elementwise with the output of the current layer to produce the thinned outputs, which are then fed to the next layer. The paper summarizes this process with a set of feed-forward equations.
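A reconstruction of those equations in the notation of Srivastava et al. (2014). Here l indexes layers, y^{(l)} is the output vector of layer l, * denotes elementwise multiplication, and p is the probability of retaining a unit:

```latex
\begin{aligned}
r_j^{(l)} &\sim \mathrm{Bernoulli}(p), \\
\tilde{\mathbf{y}}^{(l)} &= \mathbf{r}^{(l)} * \mathbf{y}^{(l)}, \\
z_i^{(l+1)} &= \mathbf{w}_i^{(l+1)} \tilde{\mathbf{y}}^{(l)} + b_i^{(l+1)}, \\
y_i^{(l+1)} &= f\big(z_i^{(l+1)}\big).
\end{aligned}
```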

"w" and "b" represent the weights and biases, and "f" is the non-linear activation function.
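A minimal pure-Python sketch of one such feed-forward step, following the paper's convention of no rescaling during training. The function name, the list-based representation, and the choice of `tanh` as f are assumptions made for illustration:

```python
import math
import random


def dropout_layer_forward(y_prev, weights, biases, p, rng, f=math.tanh):
    """One feed-forward step with dropout (Srivastava et al., 2014 notation).

    y_prev:  outputs y^(l) of the previous layer (list of floats)
    weights: one row w_i^(l+1) per unit of the next layer
    biases:  b_i^(l+1) for each unit of the next layer
    p:       probability of retaining a unit
    f:       activation function (tanh is an arbitrary choice here)
    """
    # r_j ~ Bernoulli(p): 1.0 keeps unit j, 0.0 drops it.
    r = [1.0 if rng.random() < p else 0.0 for _ in y_prev]
    # Thinned outputs: elementwise product r * y.
    y_thinned = [rj * yj for rj, yj in zip(r, y_prev)]
    # z_i = w_i . y_thinned + b_i, then y_i = f(z_i).
    z = [sum(w * y for w, y in zip(row, y_thinned)) + b
         for row, b in zip(weights, biases)]
    return [f(zi) for zi in z], r


rng = random.Random(42)
y_next, mask = dropout_layer_forward(
    [0.2, -0.4, 0.9],
    [[0.1, 0.3, -0.2], [0.5, -0.1, 0.4]],
    [0.0, 0.1],
    p=0.5,
    rng=rng,
)
print(mask, y_next)
```

Returning the mask alongside the output makes it easy to reuse the same thinned network in the backward pass.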

Dropout networks can be trained with stochastic gradient descent with momentum, just like standard neural networks.

The only difference is that a different thinned network is sampled for each training case in a mini-batch. Forward propagation and backpropagation are performed only on that thinned network. The gradient of each parameter is averaged over the training cases in each mini-batch, and any training case that drops a parameter contributes a gradient of zero for that parameter.
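A minimal sketch of this gradient computation for a single linear unit trained with squared error, used here as a deliberately simple stand-in for a deep network. The helpers `sample_masks` and `minibatch_gradient` are hypothetical. Each training case gets its own mask, and a dropped input contributes zero gradient to its weight:

```python
import random


def sample_masks(n_cases, n_inputs, p, rng):
    """Sample an independent keep (1.0) / drop (0.0) mask for every training case."""
    return [[1.0 if rng.random() < p else 0.0 for _ in range(n_inputs)]
            for _ in range(n_cases)]


def minibatch_gradient(weights, xs, ys, masks):
    """Average the squared-error gradient over the mini-batch.

    Model for one case: y_hat = sum_j w_j * r_j * x_j, loss = 0.5 * (y_hat - y)**2.
    dLoss/dw_j = (y_hat - y) * r_j * x_j, so a dropped input (r_j = 0)
    contributes exactly zero to the gradient of w_j for that case.
    """
    grads = [0.0] * len(weights)
    for x, y, r in zip(xs, ys, masks):
        thinned = [rj * xj for rj, xj in zip(r, x)]
        error = sum(w * t for w, t in zip(weights, thinned)) - y
        for j, t in enumerate(thinned):
            grads[j] += error * t
    return [g / len(xs) for g in grads]


rng = random.Random(0)
xs = [[1.0, 2.0, -1.0], [0.5, -0.5, 2.0], [1.5, 0.0, 1.0], [-1.0, 1.0, 0.5]]
ys = [1.0, 0.0, 2.0, -0.5]
w = [0.1, -0.2, 0.3]
masks = sample_masks(len(xs), len(w), p=0.5, rng=rng)
print(minibatch_gradient(w, xs, ys, masks))
```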

Constraining the norm of the incoming weight vector at each hidden unit to a fixed upper bound is another important regularization technique, called max-norm regularization. Combining dropout with max-norm regularization, high momentum, and large decaying learning rates further boosts performance.
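A minimal sketch of one such update for the incoming weight vector of a single hidden unit. It applies SGD with momentum and then projects the weights back onto the ball of radius c. The helper names and the values of `lr`, `momentum`, and `c` are illustrative assumptions:

```python
import math


def max_norm_project(w, c):
    """Rescale w so that its L2 norm is at most c (max-norm constraint)."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm > c:
        return [wi * c / norm for wi in w]
    return list(w)


def momentum_max_norm_step(w, velocity, grad, lr, momentum, c):
    """One SGD-with-momentum update followed by projection onto ||w|| <= c.

    w is the incoming weight vector of a single hidden unit.
    """
    velocity = [momentum * v - lr * g for v, g in zip(velocity, grad)]
    w = [wi + vi for wi, vi in zip(w, velocity)]
    return max_norm_project(w, c), velocity


w, v = [3.0, 4.0], [0.0, 0.0]
w, v = momentum_max_norm_step(w, v, grad=[0.0, 0.0], lr=0.1, momentum=0.95, c=2.0)
print(w)  # → [1.2, 1.6]
```

Because the norm is bounded, a large learning rate cannot make the weights grow without limit, which is what makes the combination with large learning rates workable.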

The original Dropout paper evaluated its effectiveness on five image datasets: MNIST, SVHN, CIFAR-10, CIFAR-100, and ImageNet, covering different image types and training set sizes.

On the MNIST dataset, dropout gave a significant improvement, reducing the error rate from 1.60% to 1.35%. The researchers improved the model further by enlarging the network, using max-norm regularization, and replacing the logistic activation function with ReLU.

The researchers trained many different architectures with the same fixed hyperparameters to demonstrate robustness. A corresponding graph in the original paper shows the results.

Dropout rate: The researchers plotted the test error and training error for different probabilities p of retaining a unit. For very low values of p, only a few units are active during training, which results in underfitting. For 0.4 < p < 0.8, both curves flatten, and as p approaches 1, the test error increases, indicating overfitting.

Dataset size: For very small datasets, dropout gives no improvement, because the network has enough parameters to overfit the training data even with the noise that dropout adds. As the dataset size increases, the gain from dropout first increases and then declines. For a given architecture, there is a sweet spot where dropout gives the largest benefit.

Dropout is an effective regularization technique that approximates combining many architectures and prevents co-adaptation between units.

However, dropout tends to increase training time to 2 to 3 times that of a standard neural network with the same architecture, because the parameter updates are noisy: each training case trains a different architecture. This creates a tradeoff between training time and generalization.

References

Srivastava, N., Hinton, G. E., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. (2014). Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1), 1929–1958.