Researchers at Carnegie Mellon University and Olin College of Engineering have explored using contact microphones to train ML models for robotic manipulation with audio data.

Robots designed for real-world tasks in a variety of settings must be able to grasp and manipulate objects effectively. Recent advances in machine learning-based models have aimed to strengthen these capabilities. While successful models often rely on extensive pretraining on datasets composed primarily of visual data, some also integrate tactile information to improve performance.

Researchers at Carnegie Mellon University and Olin College of Engineering have investigated contact microphones as an alternative to conventional tactile sensors. This approach allows machine learning models for robotic manipulation to be trained on audio data.

In contrast to the abundance of visual data, it is still unclear what comparable internet-scale data could be used for pretraining other modalities such as tactile sensing, which is increasingly important in the low-data regimes typical of robotics applications. The researchers address this gap by using contact microphones as an alternative tactile sensor.

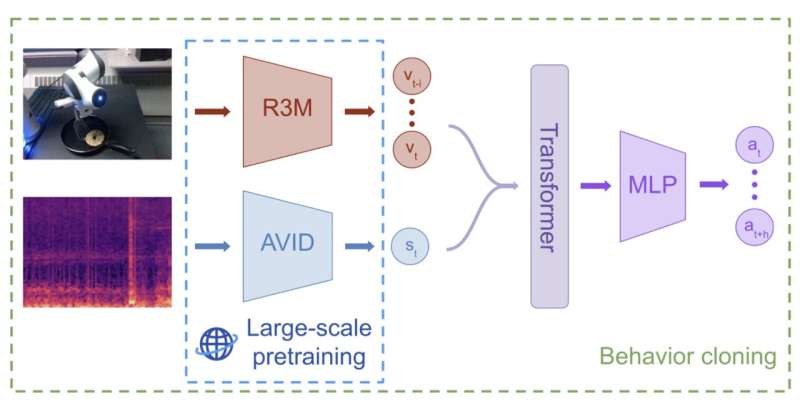

In their recent study, the team used a self-supervised machine learning model pre-trained on the AudioSet dataset, which contains over 2 million 10-second video clips featuring a variety of sounds and music collected from the internet. This model employs audio-visual instance discrimination (AVID), a technique capable of distinguishing between different types of audio-visual content.
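To give a rough sense of what cross-modal instance discrimination optimizes, here is a minimal sketch. It assumes a simplified in-batch contrastive (InfoNCE-style) loss, which is not the exact AVID objective the team used, and the function names and toy embeddings are hypothetical. Each audio clip should score highest against the video it came from and lower against the other videos in the batch.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector)) or 1.0
    return [x / norm for x in vector]


def cross_modal_contrastive_loss(audio_embeddings, video_embeddings, temperature=0.07):
    """Simplified in-batch contrastive loss (an assumption, not the exact AVID loss).

    audio_embeddings[i] and video_embeddings[i] are assumed to come from the
    same clip. The loss is low when each audio embedding is most similar to
    its own video embedding among all videos in the batch.
    """
    audio = [l2_normalize(a) for a in audio_embeddings]
    video = [l2_normalize(v) for v in video_embeddings]
    total = 0.0
    for i, a in enumerate(audio):
        # Similarity of this audio clip to every video in the batch.
        logits = [sum(x * y for x, y in zip(a, v)) / temperature for v in video]
        # Numerically stable log-sum-exp over all candidates.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Negative log-probability of picking the matching video.
        total += log_denominator - logits[i]
    return total / len(audio)


# Toy embeddings (hypothetical): three clips, three dimensions.
toy_audio = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
aligned_video = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
shuffled_video = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]

aligned_loss = cross_modal_contrastive_loss(toy_audio, aligned_video)
shuffled_loss = cross_modal_contrastive_loss(toy_audio, shuffled_video)
print("aligned pairs:", aligned_loss)
print("shuffled pairs:", shuffled_loss)
```

In this toy setup the correctly paired embeddings yield a much smaller loss than the shuffled ones, which is the signal a pretraining run would use to pull matching audio and video representations together.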

The team evaluated their model in tests where a robot had to complete real-world manipulation tasks based on no more than 60 demonstrations per task. The results were very encouraging. The model outperformed those relying solely on visual data, particularly in scenarios where the objects and settings differed significantly from the training dataset.

The key insight is that contact microphones inherently capture audio-based information. This makes it possible to use large-scale audio-visual pretraining to obtain representations that improve the performance of robotic manipulation. To the researchers' knowledge, this method is the first to leverage large-scale multisensory pre-training for robotic manipulation.

Looking ahead, the team's research could pave the way for advanced robotic manipulation using pre-trained multimodal machine learning models. Their approach has the potential for further improvement and wider testing across a variety of real-world manipulation tasks.

Reference: Jared Mejia et al, Hearing Touch: Audio-Visual Pretraining for Contact-Rich Manipulation, arXiv (2024). DOI: 10.48550/arxiv.2405.08576
